High performance SIEVE LRU cache proved correct with CsCheck SampleModelBased and SampleConcurrent

20 Feb 2024SIEVE is a new type of LRU cache. Due to its simplicity, it offers the chance to create a high performance concurrent cache.

The requirements for this cache are that it is fast, thread-safe and has an atomic GetOrAdd method. Atomic here means that the valueFactory will only be called once for a key. Neither ConcurrentDictionary or IMemoryCache offer this.

SampleModelBased and SampleConcurrent have been used to prove the implementation is correct. After some experimenting it became clear that the best way to satisfy the tests was to factor the code into a more generally usable GetOrAddAtomicAsync extension method and a thread-safe cache.

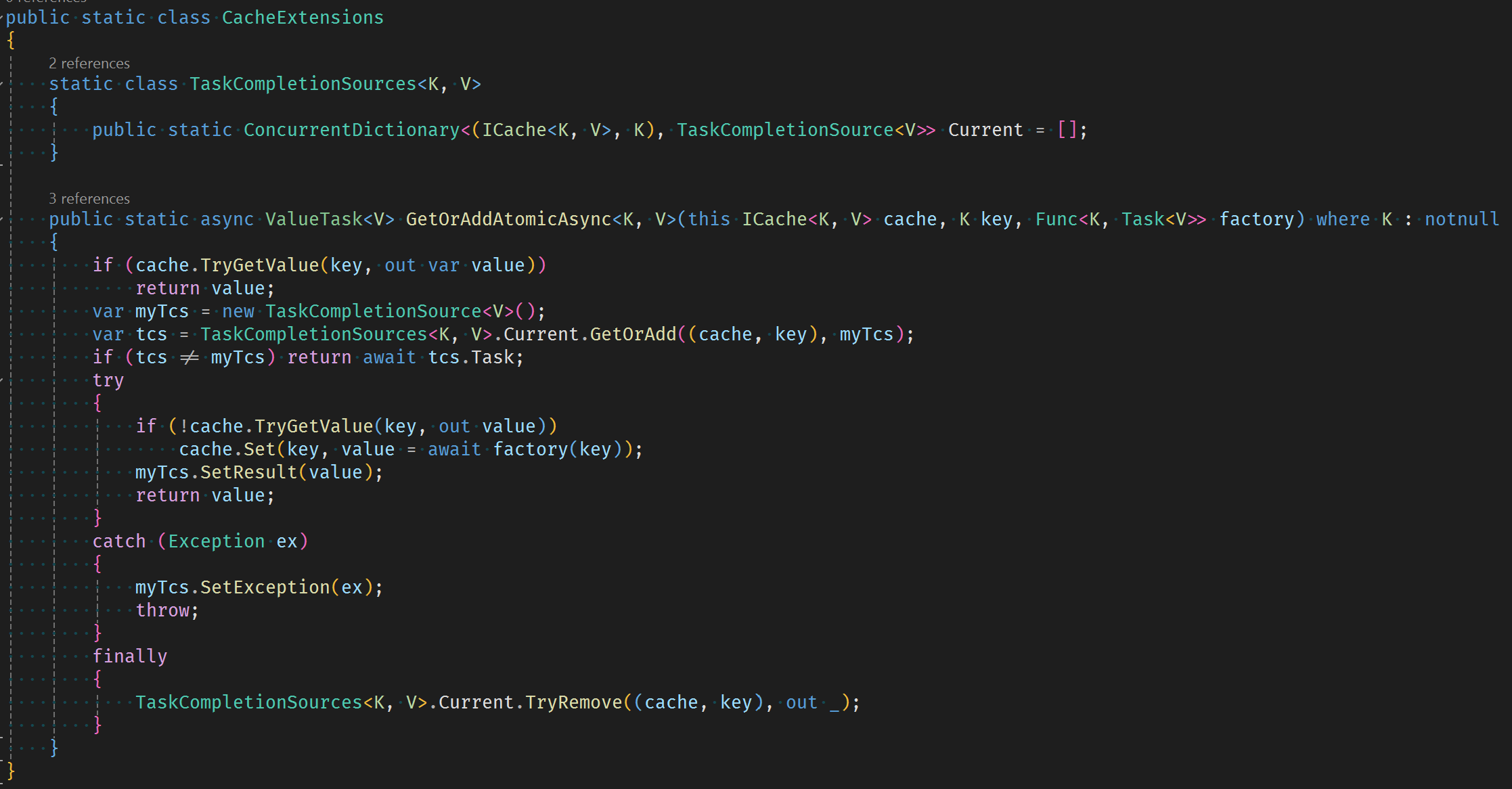

GetOrAddAtomicAsync

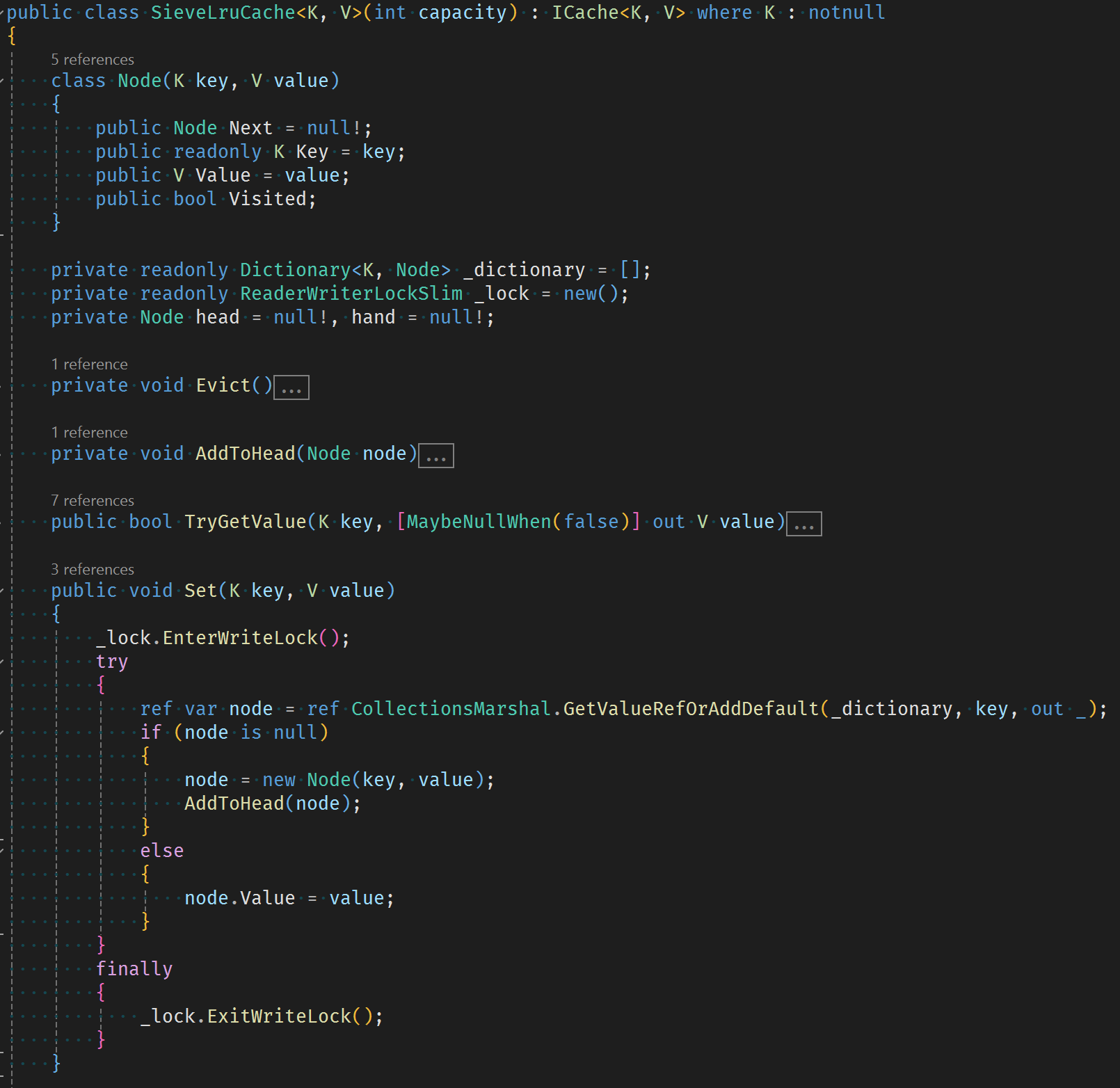

SieveLruCache

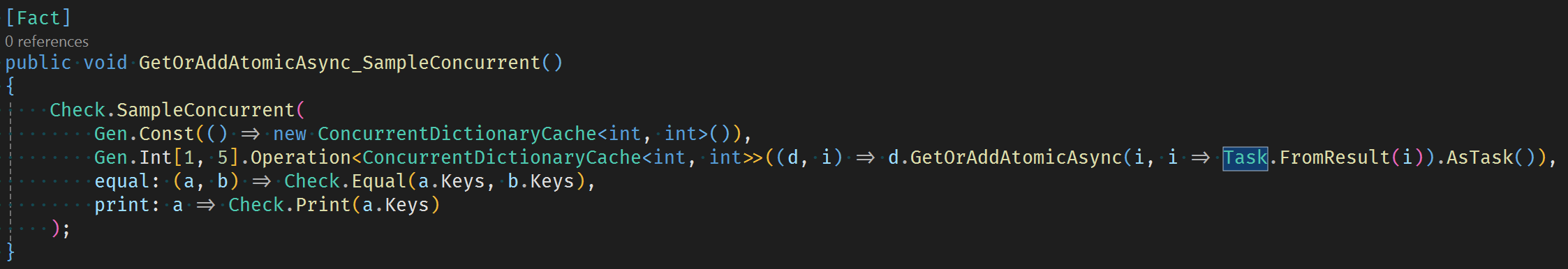

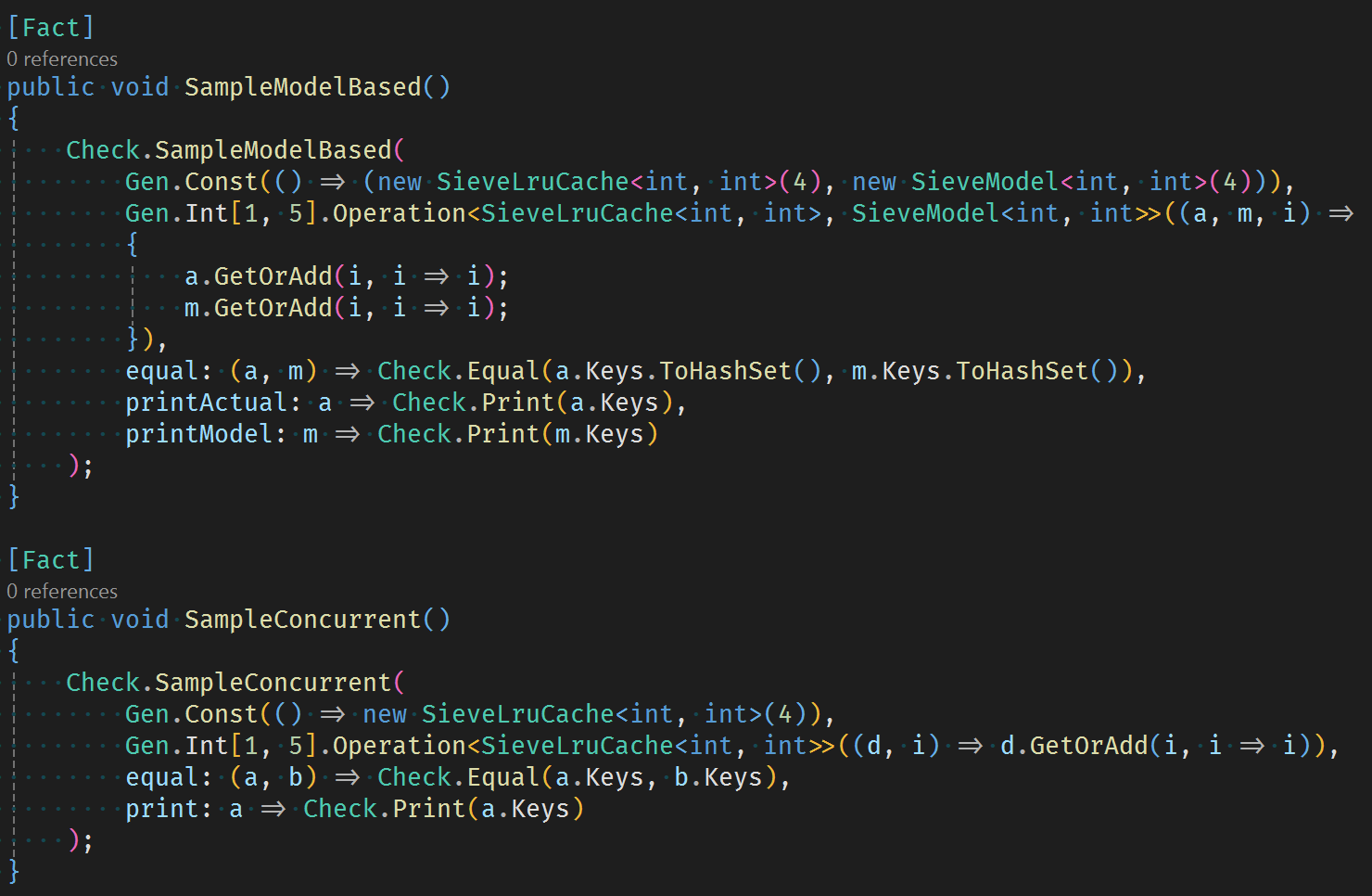

Testing

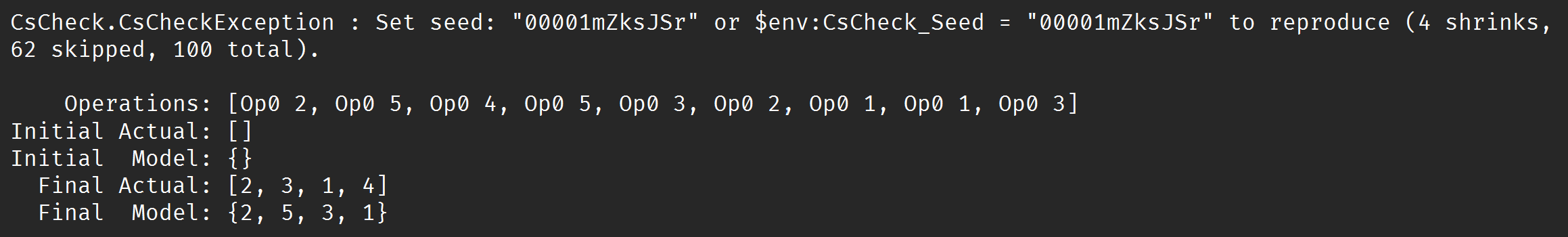

SampleModelBased example failure output:

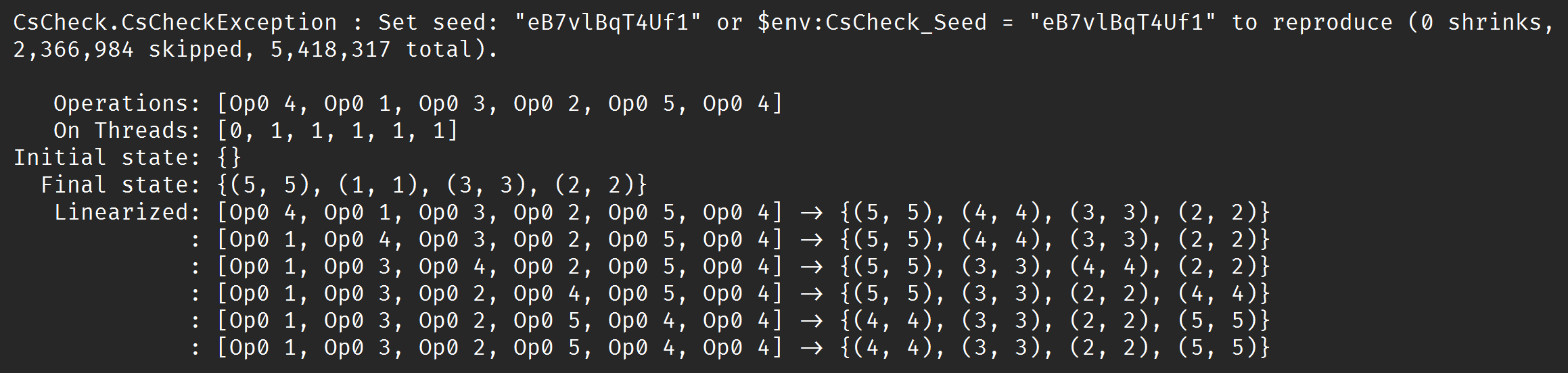

SampleConcurrent example failure output:

SIEVE paper

Cache.cs

CacheTests.cs

SieveLruCache.cs

SieveLruCacheTests.cs