In Defence of Doubles

01 Dec 2023I sometimes hear developers say you should always use decimals in financial applications. This surprises me as I've worked in this area for many years and would rarely if ever recommend using decimals. I'd argue doubles and fixed-point numbers are more sensible options.

The fear of doubles originates in part from the following misleading paragraph in the Microsoft floating-point numeric types reference.

See also other example discussions: 1, 2, 3. The implication here is that the required accuracy can only be achieved with decimals, and numeric performance is not often important in financial applications. These two points couldn't be further from the truth, and I'll aim to demonstrate this in the rest of the post.

Accuracy

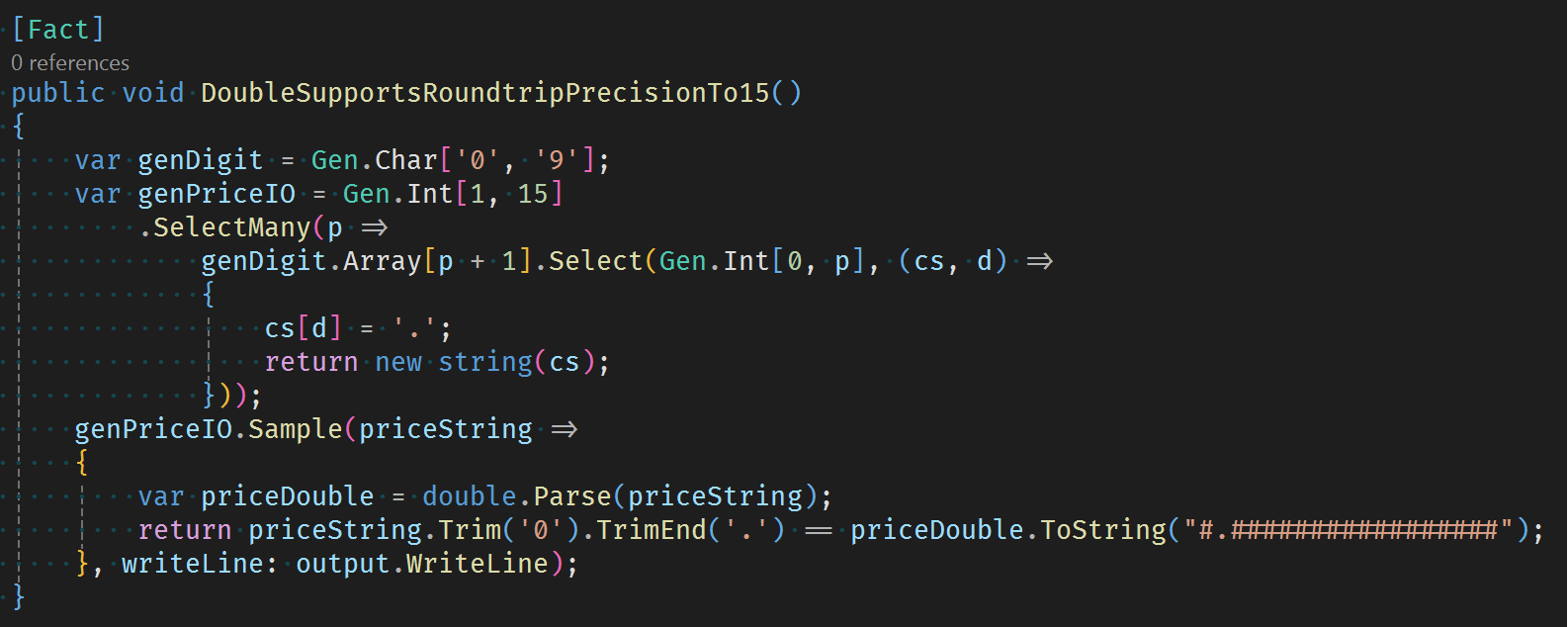

CsCheck can be used to investigate the accuracy of doubles.

This test models a price from a text feed and tests it agrees with what would be displayed after being stored as a double. The test demonstrates that doubles can hold and store faithfully numeric data up to 15 significate figures. This is more than enough for a price in a data model. Price timeseries would normally be stored as fixed-point values as they efficiently compress.

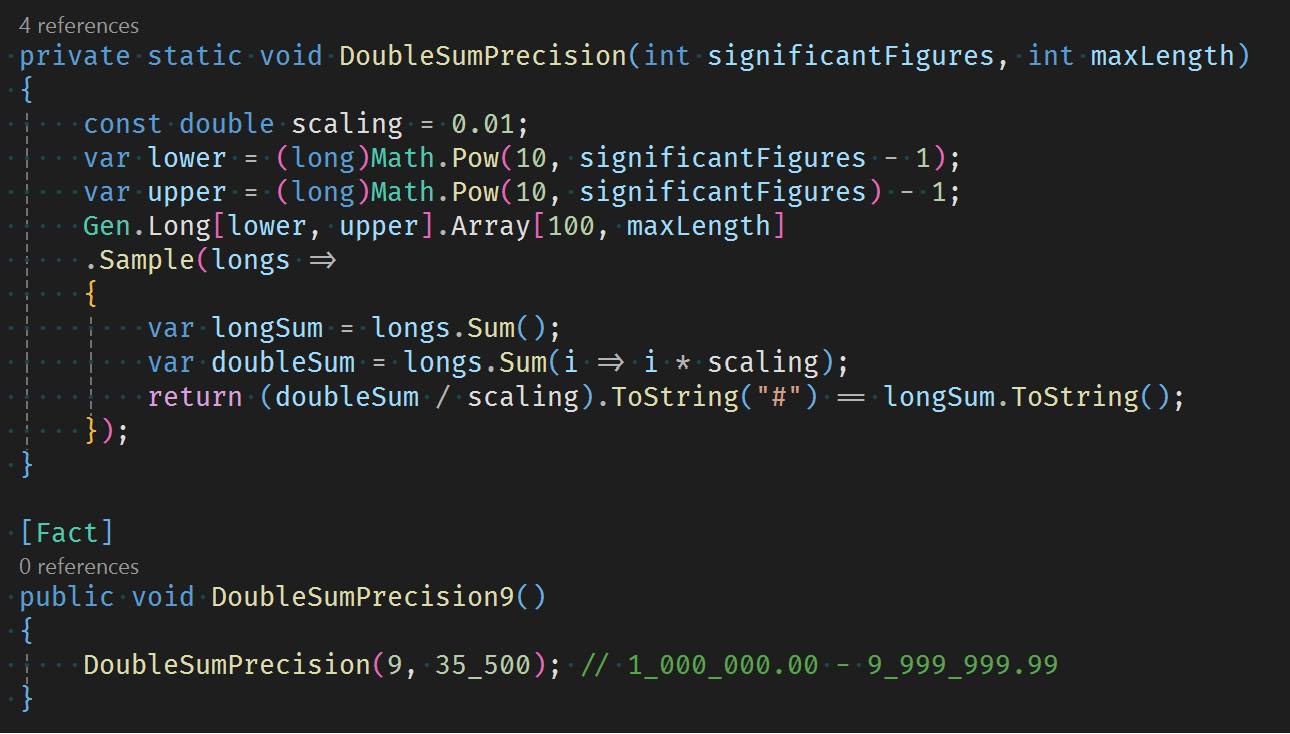

This test investigates the error in double sum calculations by comparing the exact result of a sum of longs.

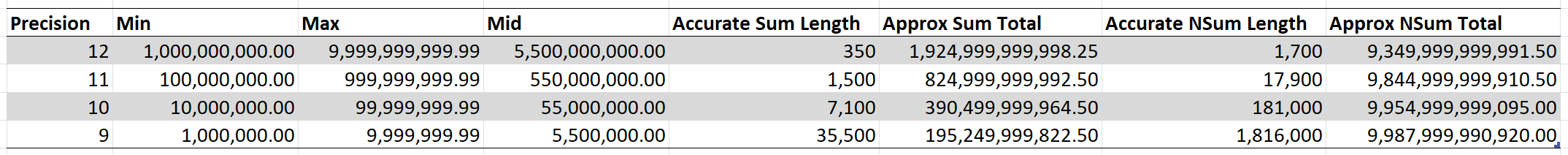

The table shows the length of random sets of doubles that are still free from rounding error. The numerical errors come from two components, firstly the fact that double doesn't store the exact number required, and secondly addition isn't an exact calculation. The second component can be eliminated by using NSum covered in a previous post. NSum is nice to have but is generally unnecessary, although I have found this kind of correction to be important in Allocate.

So, we are talking about thousands of doubles totalling many billions before we see a penny rounding issue.

This all makes sense since for example Excel also uses doubles to represent numbers. You can check this by summing a column of 350 9,999,999,999.99 value cells and comparing it to the product. If there really was an accuracy problem with doubles being used for storing or calculations financial professionals wouldn't use it. Or as a start they would ask for the sum implementation to be updated to NSum as Python 3.12 has recently done.

There are in fact many other more important sources of error than a few cents in the multiple billions. Errors in price marks, bid prices vs mid, exchange rate prices for foreign currencies.

You won't see an inconsistent total on a page or screen due to double accuracy as you can't view enough rows. It's only an imagined or mistaken risk by developers. Seen total errors can only come from an issue with the methodology such as not rounding after currency conversion.

If these calculations were to be exact to the penny, I would imagine the calculation methods would also become more complicated. For example, if you want to calculate the correct portfolio values in dollars you would need to sum each currency separately to the portfolio level, perform the currency conversion, round and then use Allocate to pro-rata back to the positions. Fees would require similar allocating to be done.

Performance

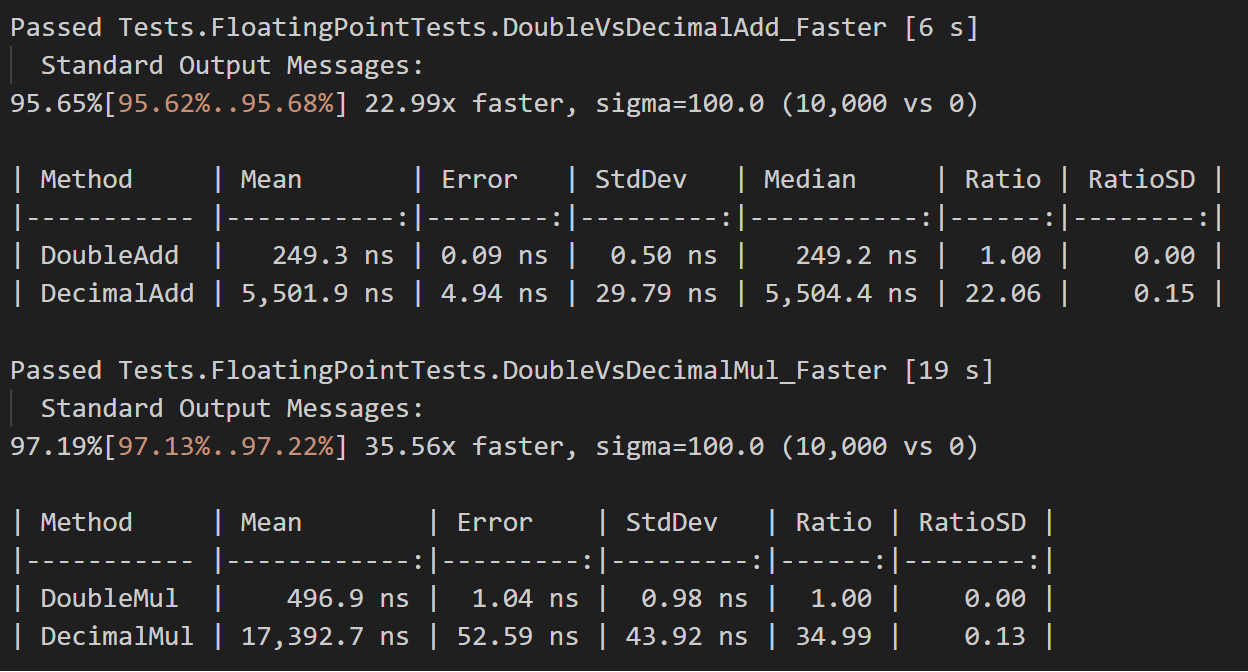

Decimals are 128-bit, twice the size of 64-bit doubles that fit in a register. Faster and BenchmarkDotNet reasonably agree that doubles are at least 22 times faster for addition and 35 times faster for multiplication. I've taken care to try to resolve the actual calculation performance difference minimising the memory access effect. Before doing this they both gave a range of performance ratios up to around 250 times faster.

The performance difference is not surprising as decimals are not supported natively by computer processors. When memory and CPU are combined for multiple calculations, this can be 50 to 100 times slower for decimals. They are a bit of a dog.

Valuation, profit and loss, returns and other accounting values can be coded as single pass sequence calculations from aggregated trade data. They are fast and can be performed on the fly giving functionality that is highly flexible. I've built a system like this in the past and covered some of these ideas in an old post.

This is far from what is usually seen. The norm is to calculate and store these values, often overnight, and to promote these derived values to actual data. This results in restricted functionality with clunky adjustment processes.

Performance of decimals vs doubles does matter. It turns a few 100 milliseconds on the fly calculation into an unworkable 10 seconds plus. It effects the architecture and features you can offer.

Implementation

There are two key implementation details that simplify handling numeric data in financial applications.

Firstly, eliminating primitive obsession. Longs, doubles, decimals, DateTime etc should not be being passed around. By creating struct types for quantity, price, trade date, time we can ensure that parsing, conversion, calculation, rounding and display are performed consistently without a performance penalty.

Secondly, a well-defined API boundary means we can store data in whatever format makes the most sense while at the same time loading and displaying in a different format. For example, quantity should always be stored as an int or long. A floating-point quantity doesn't make sense as it needs to be a quantised number you can exchange. The word quantum is derived from the Latin word quanta, which means a quantity. Dollar quantities should be stored in cents and priced as 0.01 USD. Multipliers used for loading and display are another important tool.

Together these mean we are in control and can evolve the system safely.

Conclusion

Doubles are the perfect fit for financial applications both in terms of accuracy and performance. Right-sizing in a flexible system will always give the best results.

Be a data-first architect. Have performance in mind throughout not just as an afterthought.

FloatingPointTests.cs

Data-First Architecture

Full precision floating-point summation in C#